The Unreasonable Effectiveness of Doing the Definitive Industry Survey

and the #1 thing that bad surveys do wrong

Every industry has 1-2 definitive surveys. You can and should make your own, if you have the resources and network to do it well.

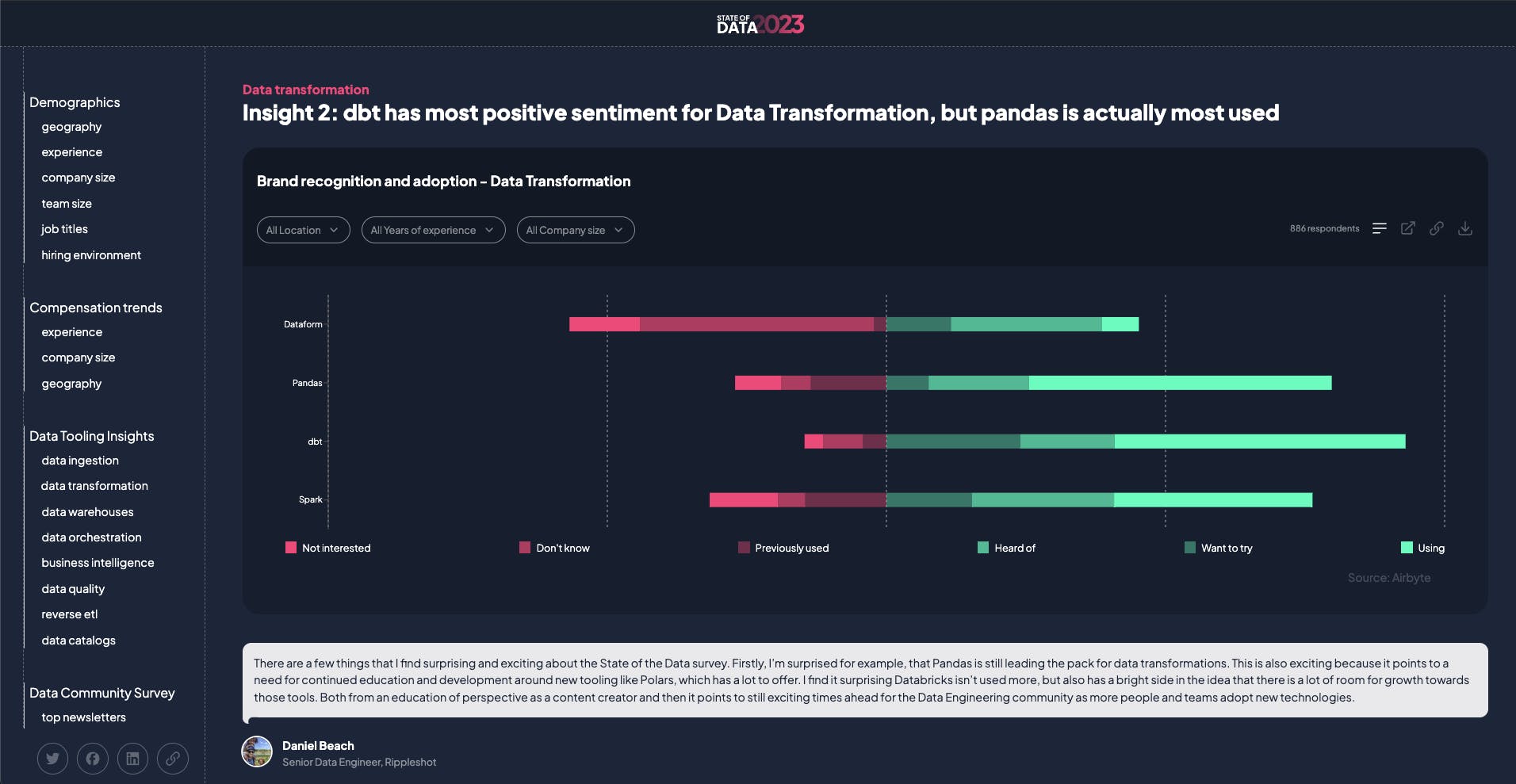

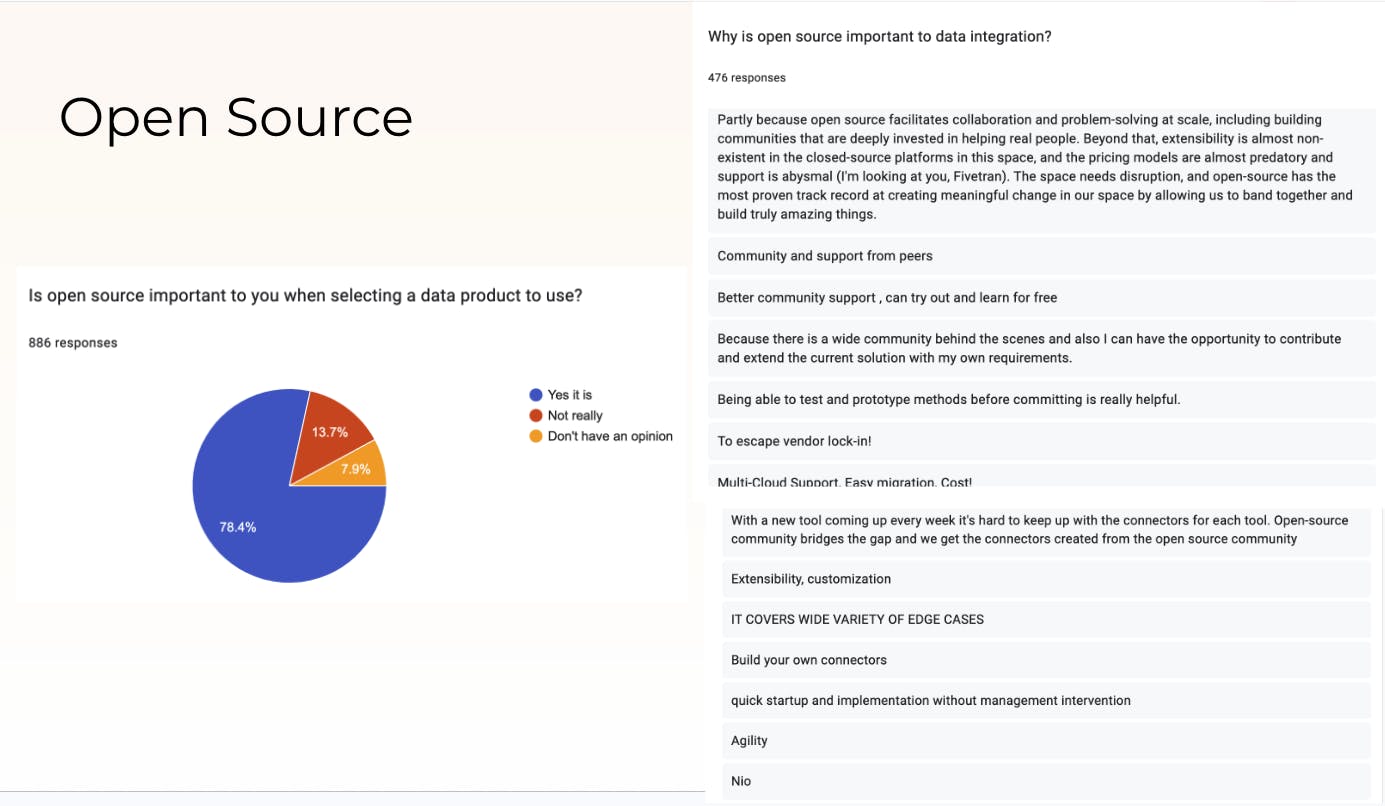

I was recently part of the Airbyte team that produced the State of Data survey. (UPDATE: I am now also running the State of AI Engineering survey!)

At 886 participants, it was actually the biggest survey ever done of the rising data engineering industry.

I've been asked often about why and how we did this and have never written it down, so here goes.

The Core Benefit

Industry Surveys serve the same community you serve, providing an imperfect snapshot that is nevertheless data-driven. They help your community:

discover new tools/media/communities

see trends over time

understand where they stand relative to their peers

The benefit to you of running this survey is that it helps people see the world the same way you do. Cynically, this can be read as propaganda, but when done well, it gives your biggest fans real data to back up their arguments, and gives fence-sitters a reason to at least take notice.

Why it works

We are all blind men touching different parts of the elephant, but beggars can't be choosers.

"When lost in the desert, one welcomes the muddy well."

- African proverb

Everyone complains about the representativeness of surveys like StackOverflow (90k), GitHub (not actually a survey), and State of JS (40k) for the ~40-80m developers in the world, but the sorry fact is that most developers are dark matter, and these surveys get outsize citations compared to their representativeness because there is no other data.
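To put rough numbers on that gap, here is a back-of-the-envelope sketch. It uses only the respondent counts and the ~40-80m developer estimate quoted above; the `coverage_pct` helper is a hypothetical name for illustration, and the population range is an estimate, not a measured figure.

```python
def coverage_pct(respondents: int, population: int) -> float:
    """Share of the population that responded, as a percentage."""
    return 100 * respondents / population


# Respondent counts and population range as quoted in the text above.
surveys = {"StackOverflow": 90_000, "State of JS": 40_000}
population_low, population_high = 40_000_000, 80_000_000

for name, respondents in surveys.items():
    best = coverage_pct(respondents, population_low)    # if there are ~40m devs
    worst = coverage_pct(respondents, population_high)  # if there are ~80m devs
    print(f"{name}: {worst:.4f}% to {best:.4f}% of developers")
```

Even the largest survey reaches roughly 0.1% to 0.2% of developers, and State of JS roughly 0.05% to 0.1%, which is why "there is no other data" matters more than the sample size itself.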

Because most other devtools companies do not treat it as a priority (well, at least before this blogpost 😉), it is surprisingly easy to create a "State of the Art" survey that stands out in your field... if you do it right.

Why Most Surveys Suck

They are done by "marketing".

"Marketing" here is a stand-in for people who don't care about, or don't have as deep a connection to, the problem domain as the people they actually serve. Of course this doesn't apply to all marketers, but the vast majority of professional marketers in devtools companies I've met fit this description - they are perfectly capable of checking off marketing and PR playbooks and incapable of crafting a new angle that gets devs actually excited. They do not hang out on Hacker News or Twitter, and they have never used competitor tooling. They are only able to uncritically repeat back what they've heard from customers because they have no deep mental model of the real problem. They are the kind of person who treats something as a "key insight" that any practitioner would dismiss with "no shit, sherlock".

The modern "developer relations" movement is an attempt to address this by hiring developers to talk to developers, but as with all marketing efforts, this cannot scale.

But my argument isn't really about the identity of the people who do the survey - I of all people believe that good ideas can come from anyone and anywhere - but is more about whether the survey runner, deep down, cares about the problem. The care bleeds through in everything: the choice of questions, the respondents you reach out to, the way you generate insights from the data, and how it informs your subsequent actions.

Other Benefits

Cost vs Benefit. It only takes a part-time commitment of 1-2 months out of a year but you can talk about it 365 days/year. The biggest cost is in the first year, when you have to figure out all the questions and get people to fill out a form with no brand. Cost goes down/traction goes up in subsequent years.

Virality and Reuse. Nicely produced charts can become money shots for your keynotes at talks and eye-catching visuals for your social media images. Perhaps even better, they let other people create visuals for discussing their favorite industry topic, linking back to you.

Lead Capture. Nobody loves handing over emails, but people will usually fill out a form for a glossy-pdf-that-couldve-been-a-blogpost.

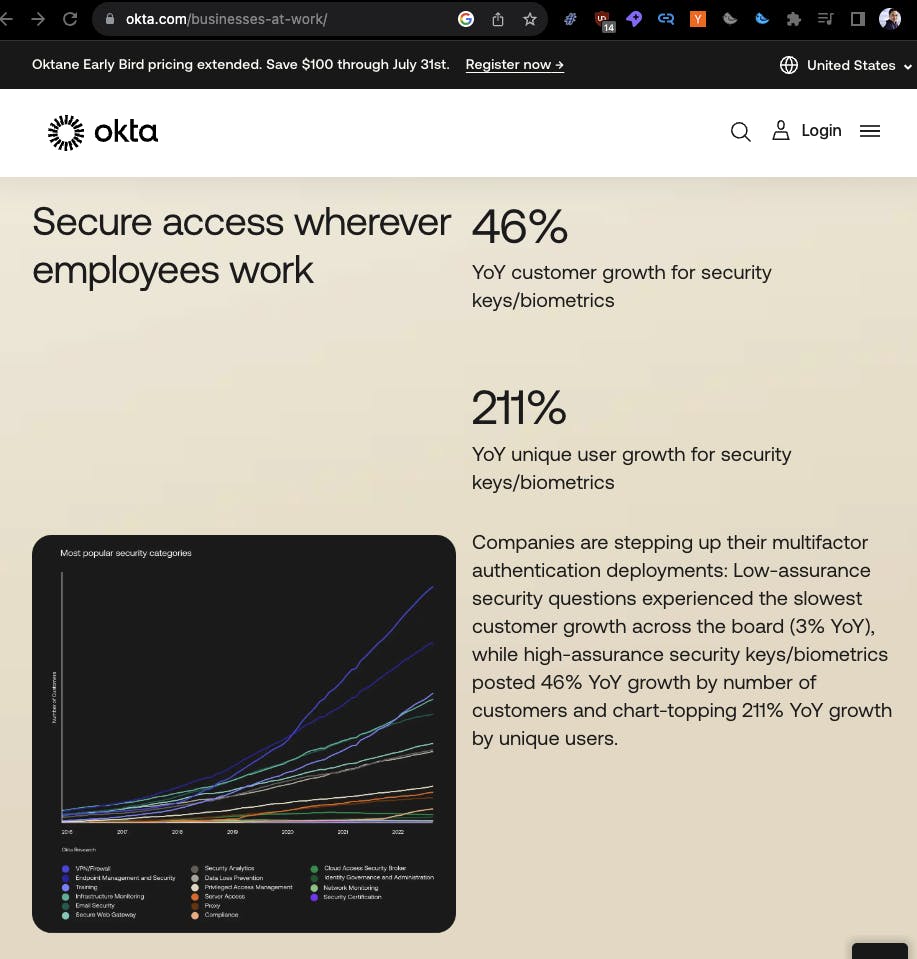

Flex your product/community. One thing I admire about Okta's Businesses at Work report is that all their data is from their product. They show trends, but trends according to Okta. It's a brilliant flex, but one only possible when you are a credible authority. If you have it, flaunt it. Airbyte had a large community, so I used that.

Inform your product/marketing. This ties into the implementation notes, but I strongly advise building reusable "DX Infrastructure" for your company. This means 1) keeping a constantly updated, ranked list of ecosystem players, newsletters, podcasts, YouTube channels, and communities so that your entire company is aligned on who/what the priorities are, and 2) having a deep, informed understanding of the major pain points of your target audience in quantitative and qualitative terms. Pay attention to how they describe their own pain.

Implementation Notes

An unordered (for now) list of thoughts:

Planning

You'll always want data on: Demographics (to answer representativeness questions), Compensation (drives clicks), Ecosystem/Tools (drives trends data), and Media - newsletters, podcasts, YouTube channels, and communities (informs your product/marketing, lets respondents discover new things).

You'll have to decide if you want this to be a neutral survey or a company-centric survey. There is no real middle ground here. Obviously it'll be run and funded by you, but, for example, we opted for neutral in the State of Data survey by giving it its own https://state-of-data.com/ domain and including competitors' names in the survey. There are many founders who believe in never mentioning their competitors, but this is not my personal default, because I think it is always better to start from a worldview shared by your users (one where you actually are one of many options) before guiding them to the promised land (where you have a monopoly on what your thing is).

Google Forms is surprisingly common for surveys, but doesn't look great. You may want to spring for Typeform if it matters (we didn't). For AI Engineering, we went with SurveyMonkey because it tracks partial completions as well.

Collection

Launch the survey with influencers if you can. Our VC helped out by mentioning our survey in his newsletter, for example.

Don't fire-and-forget. Pay attention to the early responses. If they look strange, or you realize you forgot to include/ask something, you still have time to edit the questions to clarify so that your whole survey won't be ruined.

Give maybe a 3-4 week window to complete the survey, and extend for 1-2 weeks for latecomers.

Announcing early-preview results to your ecosystem players while the survey is still running can drive them to help promote your survey as a form of mutual self-interest.

We paid to promote our survey on Reddit and Twitter. Honestly not sure how much it drove completions/awareness because we didn't really track it, but it was cheap anyway.
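One common way to close that tracking gap is to tag each promoted link with UTM parameters, so that whatever analytics sits behind the survey page can attribute visits to a channel. The sketch below is a minimal illustration using only the Python standard library, not what we actually did: the `tag_link` helper, the `paid_social` medium, and the `survey_launch` campaign name are all hypothetical, and it assumes your survey tool or analytics records query parameters.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def tag_link(url: str, source: str, campaign: str, medium: str = "paid_social") -> str:
    """Return `url` with utm_* query parameters appended (existing ones are kept)."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [
        ("utm_source", source),      # where the click came from, e.g. "reddit"
        ("utm_medium", medium),      # hypothetical label for paid promotion
        ("utm_campaign", campaign),  # hypothetical campaign name
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


# One distinct link per paid channel, so completions can be split by source.
for channel in ("reddit", "twitter"):
    print(tag_link("https://state-of-data.com/", channel, "survey_launch"))
```

Using a separate link per channel is the design choice that matters here: it lets you compare completions per channel against spend, even if the spend was cheap.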

Publishing

Just publishing a bunch of charts is fine, but narrative commentary and insights take it to the next level. I like how the State of JS has looped in influencers to write something interesting, but you have to give space for good writers to expand.

Here is where you can get your PR team involved to do a press release, and go on a media blitz to talk about what you learned from the results.

Positive Examples (tbc)

I'll add to this list over time, pls feel free to suggest ones you like.

Smaller ones that are still noteworthy/have good ideas to steal

Timescale's State of Postgres survey was beautifully designed and had decent marketing ROI.